Build Linear Regression from Scratch (No Libraries, Full Math + Code)

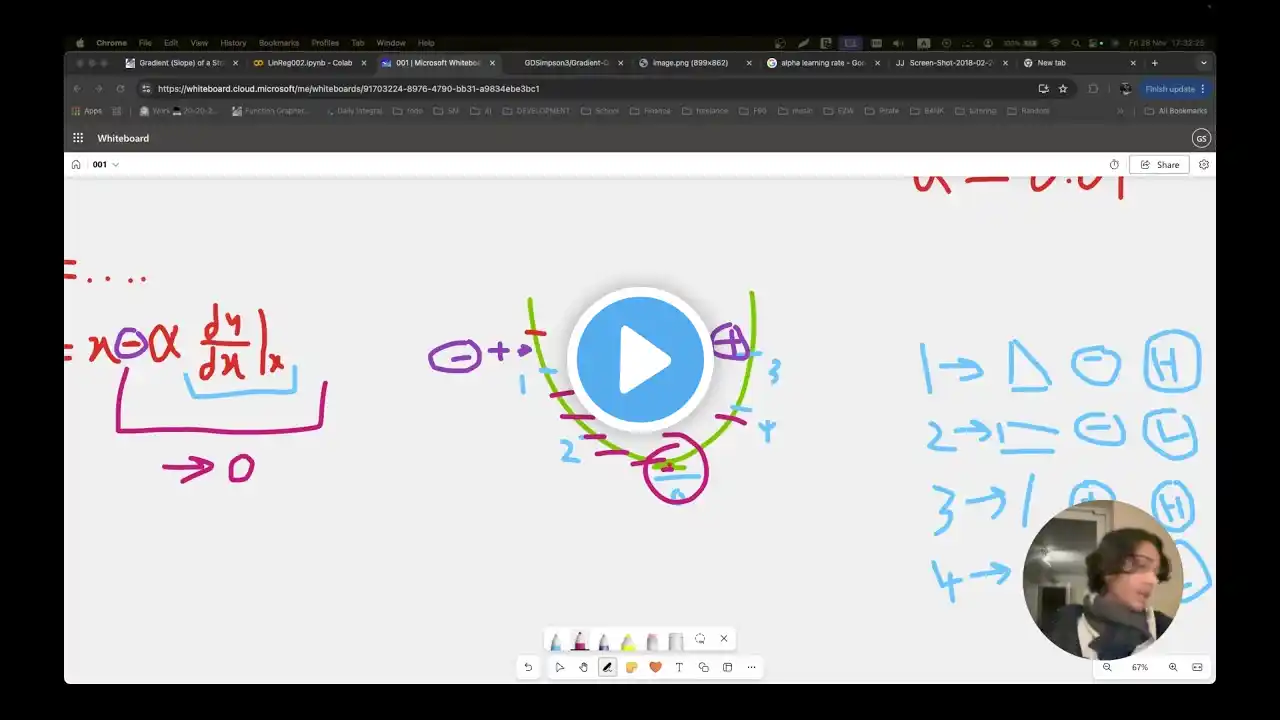

This video implements linear regression from scratch by applying gradient descent to our loss function, the Mean Squared Error (MSE). It covers everything: the concept of a residual, loss functions, the MSE, gradient descent simplified, gradient descent on a bivariate function, partial derivatives, and a Python implementation in Google Colab.

Resources:
GitHub repo (everything is here): https://github.com/GDSimpson3/LinearR...
Dataset: https://raw.githubusercontent.com/GDS...
Notebook: https://github.com/GDSimpson3/LinearR...
Board: https://github.com/GDSimpson3/LinearR...

Tools used:
Google Colab
Microsoft Whiteboard
OBS

AI Community / discord

CHAPTERS
0:00 Introduction
0:47 Getting our Dataset
5:00 Visualise the Data
8:15 Regression Line Concept
10:20 Loss Functions
10:30 Residual
12:16 Mean Squared Error
20:16 The Gradient Descent on a Simple Quadratic
29:56 The Gradient Descent on our Bivariate Function
32:19 Partial Derivatives Explanation
36:41 Differentiating a Summation
38:12 Partial Derivatives of our MSE
41:15 Gradient Descent with Partial Derivatives
46:55 Implement in Code
47:05 Drawing a Line
50:26 Implementing the MSE
57:22 Partial Derivatives Implementation
01:00:09 Gradient Descent Implementation
01:05:12 Visualise the MSE
01:07:35 Pitfalls of the Gradient Descent
01:08:56 Extra

TAGS
#linearregression #regression #AI #ML #2025 #machinelearning #beginner #residual #statistics #partialderivatives #gradientdescent #firstprinciples #implementation #python #jupyterlab #gradients #iteration #convergence #tutorial #alpha #learningrate
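For readers who want a preview of the idea before watching, here is a minimal sketch of gradient descent on the MSE in plain Python. It is not the notebook from the video: the function names (`mse`, `gradients`, `gradient_descent`), the toy data, the learning rate `alpha = 0.05`, and the iteration count are all assumptions chosen for illustration. It assumes the line is written as y = m*x + b and that the MSE is the average of the squared residuals, which gives the partial derivatives shown in the comments.

```python
# Illustrative sketch only; names, data, and hyperparameters are assumptions,
# not taken from the video's notebook.

def mse(m, b, xs, ys):
    """Mean Squared Error of the line y = m*x + b over the data."""
    n = len(xs)
    # residual = actual y minus predicted y
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys)) / n


def gradients(m, b, xs, ys):
    """Partial derivatives of the MSE with respect to m and b.

    dMSE/dm = (-2/n) * sum(x_i * (y_i - (m*x_i + b)))
    dMSE/db = (-2/n) * sum(y_i - (m*x_i + b))
    """
    n = len(xs)
    dm = (-2 / n) * sum(x * (y - (m * x + b)) for x, y in zip(xs, ys))
    db = (-2 / n) * sum(y - (m * x + b) for x, y in zip(xs, ys))
    return dm, db


def gradient_descent(xs, ys, alpha=0.05, iterations=2000):
    """Repeatedly step m and b against the gradient, scaled by alpha."""
    m, b = 0.0, 0.0  # arbitrary starting point
    for _ in range(iterations):
        dm, db = gradients(m, b, xs, ys)
        m -= alpha * dm
        b -= alpha * db
    return m, b


# Toy data generated from y = 2x + 1, so the fit should approach m = 2, b = 1.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]

m, b = gradient_descent(xs, ys)
print("slope:", m, "intercept:", b, "MSE:", mse(m, b, xs, ys))
```

The learning rate matters here: a value that is too large makes the updates overshoot and diverge, while a value that is too small converges very slowly, so `alpha` usually needs tuning for a real dataset.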