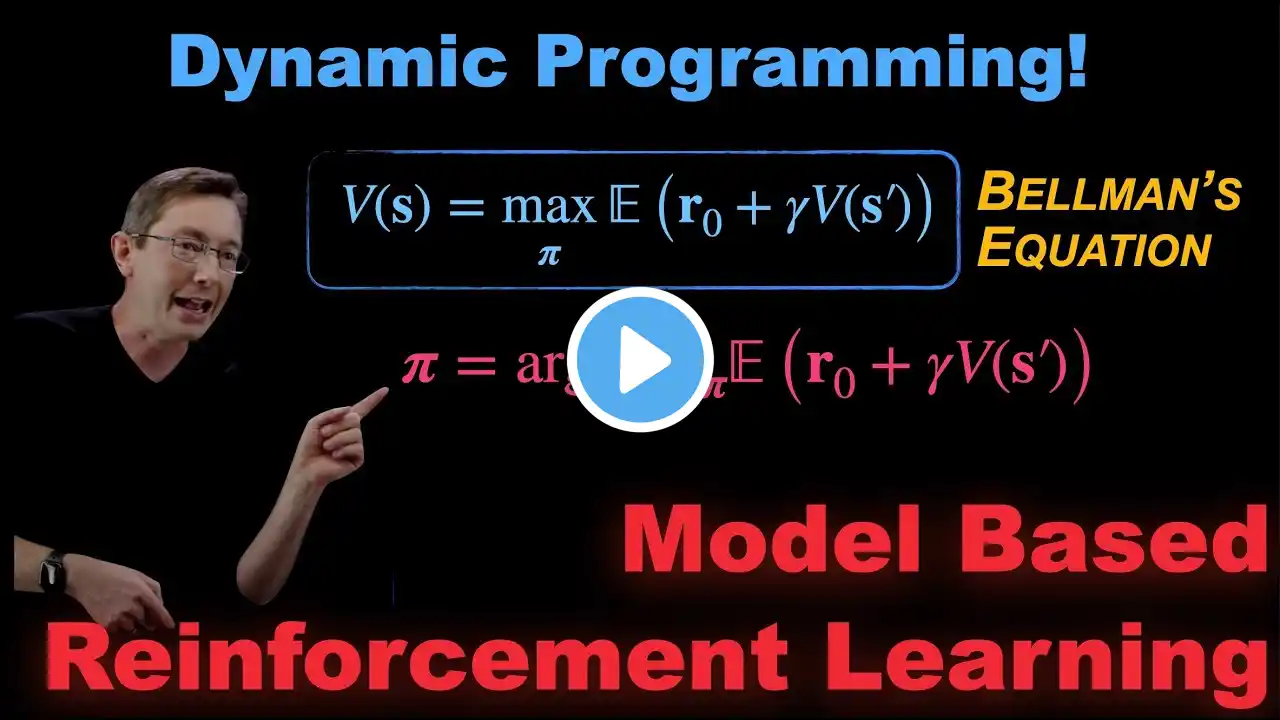

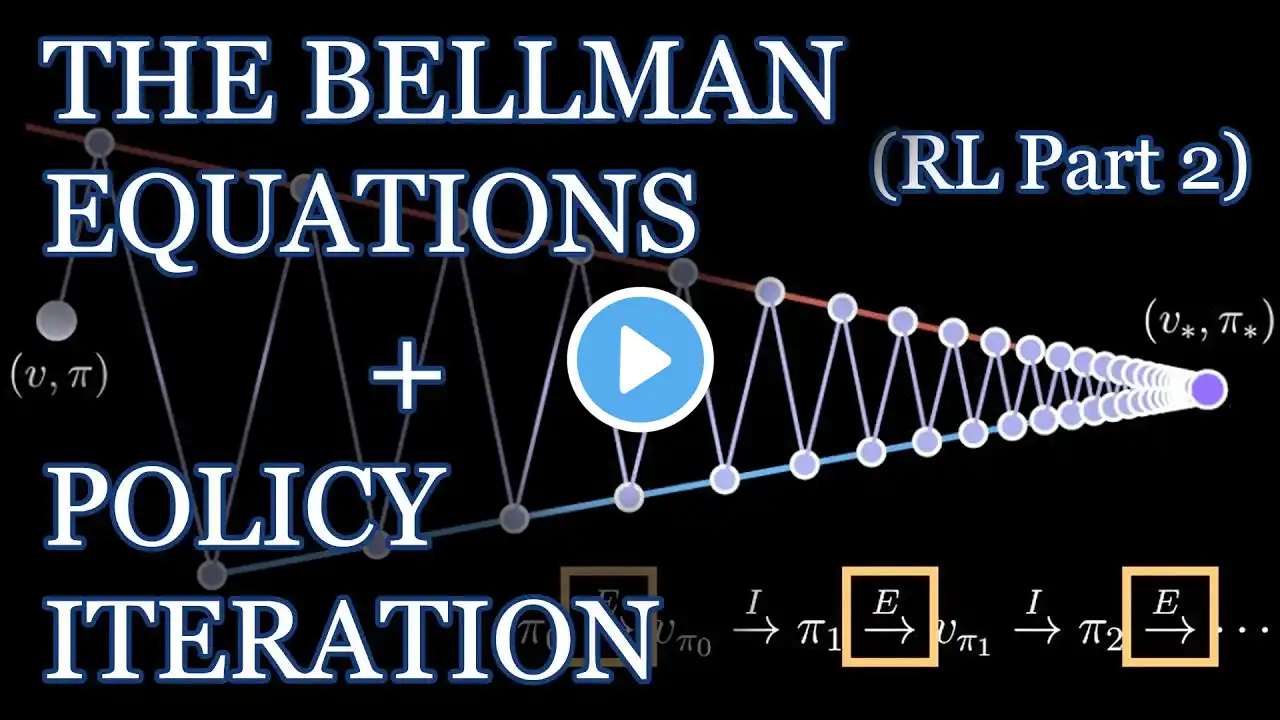

Bellman Equations, Dynamic Programming, Generalized Policy Iteration | Reinforcement Learning Part 2

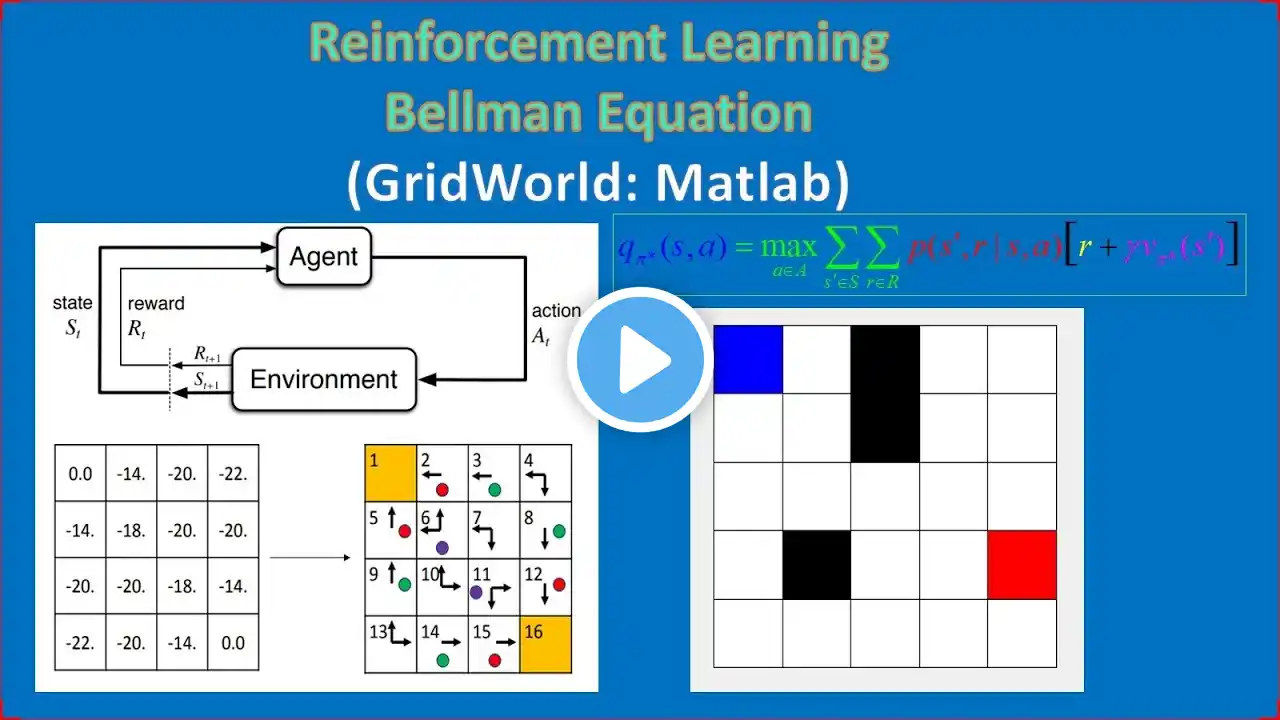

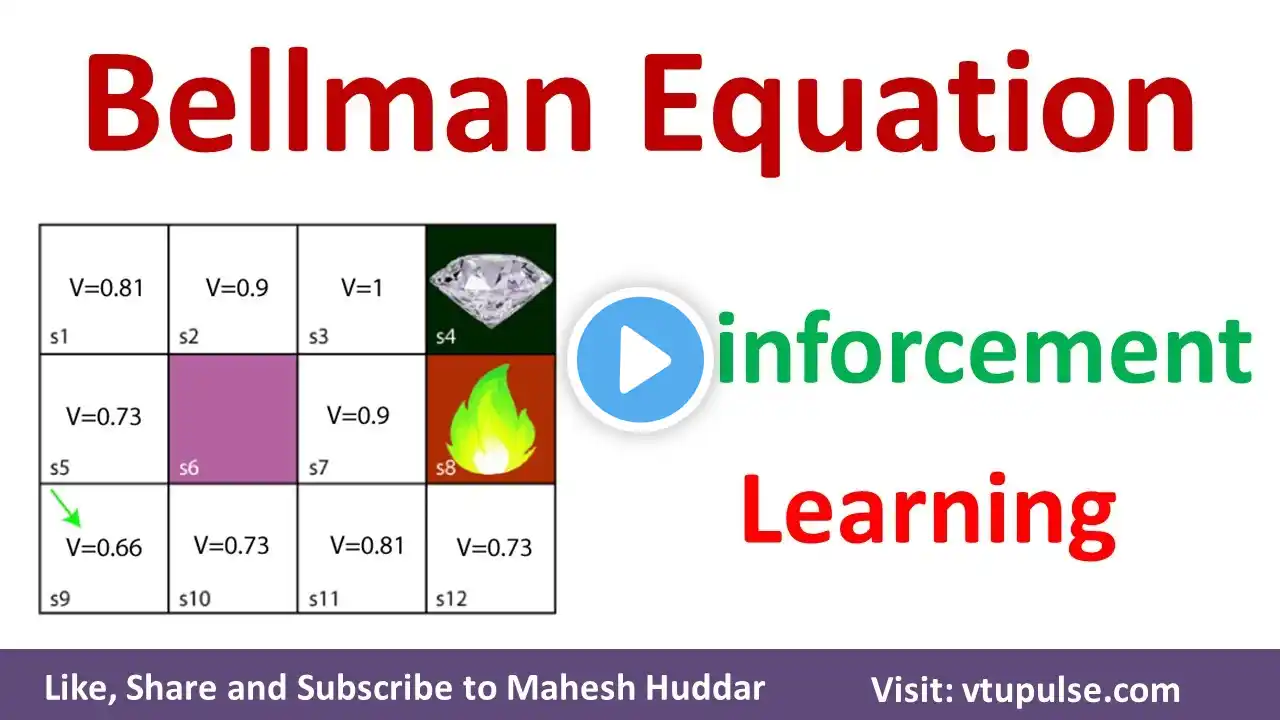

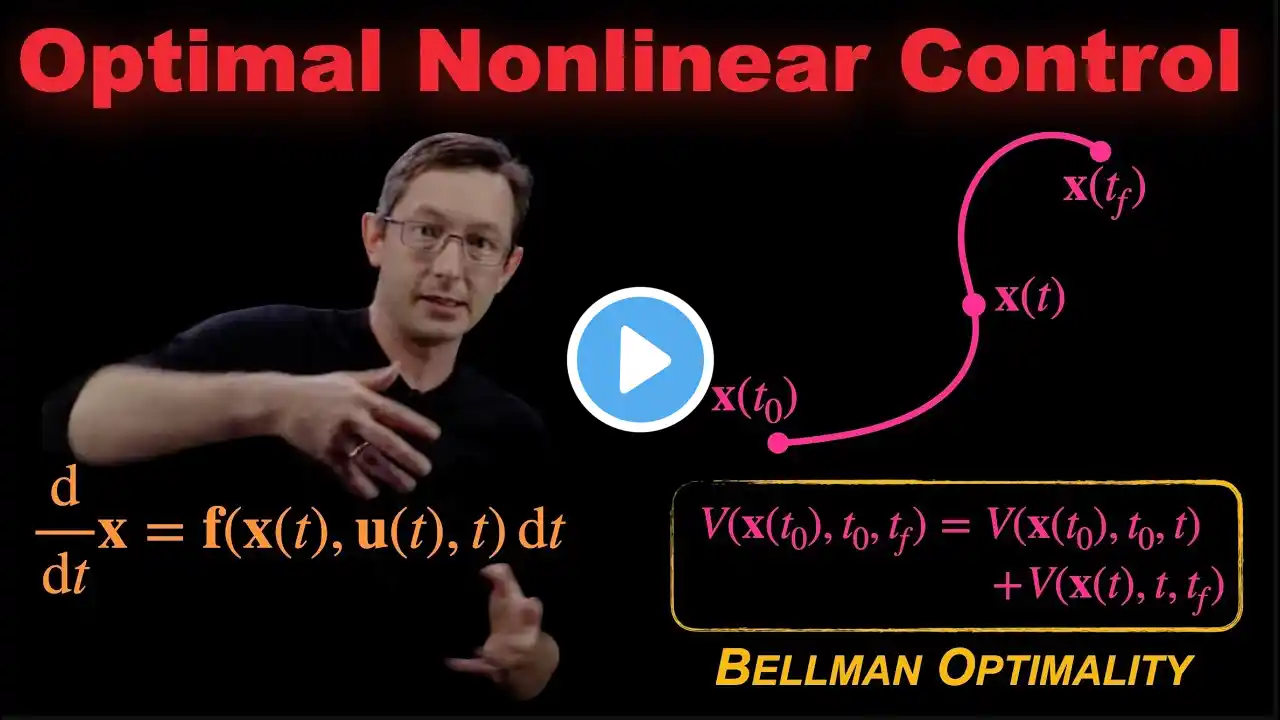

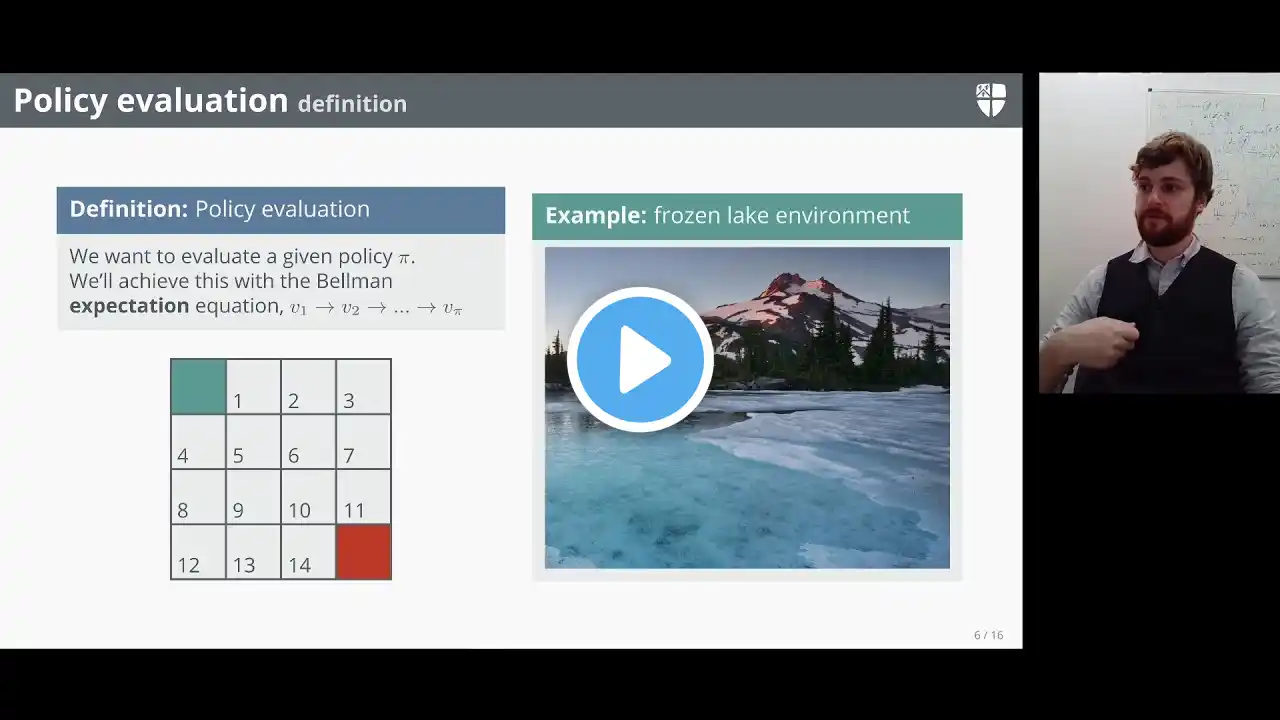

The machine learning consultancy: https://truetheta.io Join my email list to get educational and useful articles (and nothing else!): https://mailchi.mp/truetheta/true-the... Want to work together? See here: https://truetheta.io/about/#want-to-w... Part two of a six part series on Reinforcement Learning. We discuss the Bellman Equations, Dynamic Programming and Generalized Policy Iteration. SOCIAL MEDIA LinkedIn : / dj-rich-90b91753 Twitter : / duanejrich Github: https://github.com/Duane321 Enjoy learning this way? Want me to make more videos? Consider supporting me on Patreon: / mutualinformation SOURCES [1] R. Sutton and A. Barto. Reinforcement learning: An Introduction (2nd Ed). MIT Press, 2018. [2] H. Hasselt, et al. RL Lecture Series, Deepmind and UCL, 2021, • DeepMind x UCL | Deep Learning Lecture Ser... SOURCE NOTES The video covers the topics of Chapter 3 and 4 from [1]. The whole series teaches from [1]. [2] was a useful secondary resource. TIMESTAMP 0:00 What We'll Learn 1:09 Review of Previous Topics 2:46 Definition of Dynamic Programming 3:05 Discovering the Bellman Equation 7:13 Bellman Optimality 8:41 A Grid View of the Bellman Equations 11:24 Policy Evaluation 13:58 Policy Improvement 15:55 Generalized Policy Iteration 17:55 A Beautiful View of GPI 18:14 The Gambler's Problem 20:42 Watch the Next Video!