ML Essentials Explained: Functions, Loss, and Gradient Descent + Iterative Live Demos - With Code

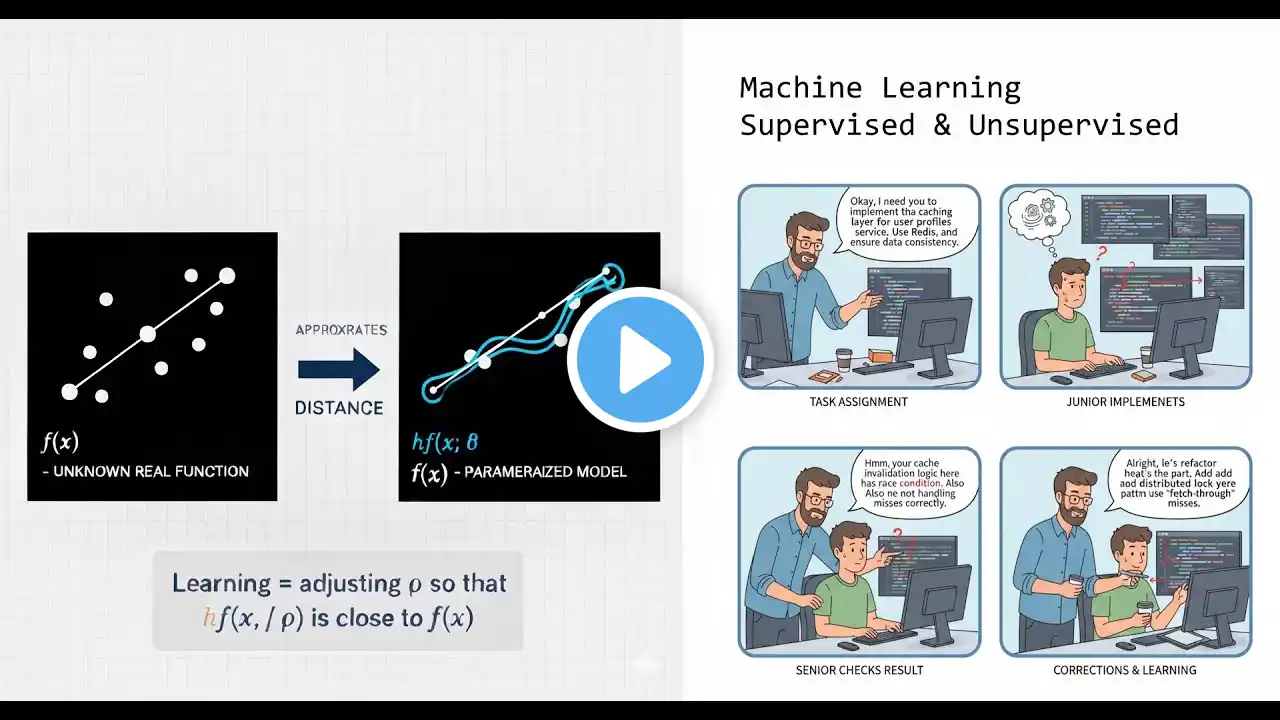

Unlock the core ideas that power modern Machine Learning. In this video, we break down ML’s foundation: function approximation, supervised vs. unsupervised learning, and the optimization engine behind nearly every model (Loss Functions and Gradient Descent).

To make the concepts concrete, we walk through two hands-on demos using NumPy and Matplotlib:

- *Linear Regression* for supervised learning (predicting house prices)
- *K-means Clustering* for unsupervised learning (discovering patterns in unlabeled data)

By the end, you’ll understand not just the “how” but the **why**: why loss functions measure model quality, why gradient descent converges (given a suitable learning rate), and why ML is ultimately about approximating unknown functions.

Full code demos will be available on GitHub: https://github.com/josedacruz/tensorf...

Connect with the author: José Cruz

---

⏱️ *Timestamps*

- 00:00 What Machine Learning Really Is (Seniors and Juniors)
- 05:46 Example with House Prices (Error/Loss Function)
- 12:32 Iterative Example for Function Approximation
- 15:09 Iterative Example for Gradient Descent
- 20:36 Demo: Linear Regression in NumPy
- 26:41 Demo: K-means Clustering in NumPy
- 32:15 Core Machine Learning Algorithms Overview
- 34:49 A complete code example

---

#MachineLearning #DataScience #GradientDescent #LinearRegression #Kmeans #Python #NumPy #MLTutorial #AI #LossFunction #CostFunction #Optimization #CalculusForML #MachineLearningAlgorithms #LearnML #MLforBeginners #AIforEveryone #CodingLife #TechEducation #HandsOnML #DataAnalytics #DeepLearning #DataViz #MLinProduction #TechTalk #SoftwareEngineering #Developer #Programming #100DaysOfCode #MLFundamentals #Statistics #AppliedMath #ModelTraining #DataMining #CodingTutorial
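As a rough illustration of the supervised-learning idea described above, here is a minimal NumPy sketch of linear regression trained with batch gradient descent on a mean squared error (MSE) loss. It is not the code from the video or its GitHub repository. The house-size/price data is synthetic and hypothetical, and the function names `mse_loss` and `fit_linear_regression`, the learning rate, and the step count are illustrative assumptions. The loss is L(w, b) = (1/n) Σ (w·xᵢ + b − yᵢ)², and each step moves the parameters against the gradient: w ← w − η·∂L/∂w and b ← b − η·∂L/∂b.

```python
import numpy as np

# Illustrative sketch; function names are hypothetical, not from the video's repository.

def mse_loss(y_true, y_pred):
    """Mean squared error: the average of the squared residuals."""
    return np.mean((y_true - y_pred) ** 2)

def fit_linear_regression(x, y, learning_rate=0.1, n_steps=2000):
    """Fit y ≈ w * x + b by batch gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(x)
    history = []
    for _ in range(n_steps):
        y_pred = w * x + b
        error = y_pred - y
        # Partial derivatives of the MSE with respect to w and b
        grad_w = (2.0 / n) * np.sum(error * x)
        grad_b = (2.0 / n) * np.sum(error)
        # Step against the gradient
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        # Record the loss of the parameters used in this step (before the update)
        history.append(mse_loss(y, y_pred))
    return w, b, history

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical data: house size (in units of 100 m^2) vs. price (in units of $100k)
    size = rng.uniform(0.5, 3.0, size=100)
    price = 1.5 * size + 0.5 + rng.normal(0, 0.1, size=100)
    w, b, history = fit_linear_regression(size, price)
    print(f"w = {w:.3f}, b = {b:.3f}, final loss = {history[-1]:.4f}")
```

Because the MSE is a quadratic bowl in w and b, a small enough learning rate makes the loss shrink step after step; if `learning_rate` is too large for the data's scale, the updates overshoot and diverge instead, which is the practical caveat behind "gradient descent converges".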
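For the unsupervised side, a minimal K-means sketch in NumPy might look like the block below. It uses Lloyd's algorithm (assign every point to its nearest centroid, then move each centroid to the mean of its assigned points, and repeat until nothing changes) and, as an assumption on our part, k-means++ seeding to choose spread-out initial centroids; the video's demo may initialize differently. The helper names and the three-blob dataset are hypothetical.

```python
import numpy as np

# Illustrative sketch; helper names are hypothetical, not from the video's repository.

def assign_clusters(points, centroids):
    """Return the index of the nearest centroid for every point (squared Euclidean distance)."""
    distances = ((points[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return distances.argmin(axis=1)

def kmeans_plus_plus_init(points, k, rng):
    """Pick k initial centroids with k-means++ seeding (favors points far from chosen ones)."""
    centroids = [points[rng.integers(len(points))]]
    for _ in range(1, k):
        chosen = np.array(centroids)
        # Squared distance from each point to its closest already-chosen centroid
        d2 = ((points[:, None, :] - chosen[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        total = d2.sum()
        probs = d2 / total if total > 0 else np.full(len(points), 1.0 / len(points))
        centroids.append(points[rng.choice(len(points), p=probs)])
    return np.array(centroids, dtype=float)

def kmeans(points, k, n_iters=100, seed=0):
    """Lloyd's algorithm: alternate assignment and update steps until centroids stop moving."""
    rng = np.random.default_rng(seed)
    centroids = kmeans_plus_plus_init(points, k, rng)
    for _ in range(n_iters):
        # Assignment step
        labels = assign_clusters(points, centroids)
        # Update step: each centroid moves to the mean of its members
        new_centroids = centroids.copy()
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:  # leave an empty cluster's centroid where it was
                new_centroids[j] = members.mean(axis=0)
        converged = np.allclose(new_centroids, centroids)
        centroids = new_centroids
        if converged:
            break
    # Final labels consistent with the final centroids
    labels = assign_clusters(points, centroids)
    # Inertia: within-cluster sum of squared distances, the loss K-means minimizes
    inertia = float(((points - centroids[labels]) ** 2).sum())
    return centroids, labels, inertia

if __name__ == "__main__":
    rng = np.random.default_rng(42)
    # Hypothetical unlabeled data: three 2-D blobs around different centers
    centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
    points = np.vstack([c + rng.normal(0, 0.5, size=(100, 2)) for c in centers])
    centroids, labels, inertia = kmeans(points, k=3)
    print("centroids:\n", np.round(centroids, 2))
    print("inertia:", round(inertia, 2))
```

The returned inertia plays the role of the loss function for K-means: each assignment step and each update step can only lower it or leave it unchanged, which is why the loop settles, although possibly in a local minimum that depends on the initial centroids.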