MASTER Statistical Inference: Hypothesis Testing, P-Value, & Confidence Intervals Explained

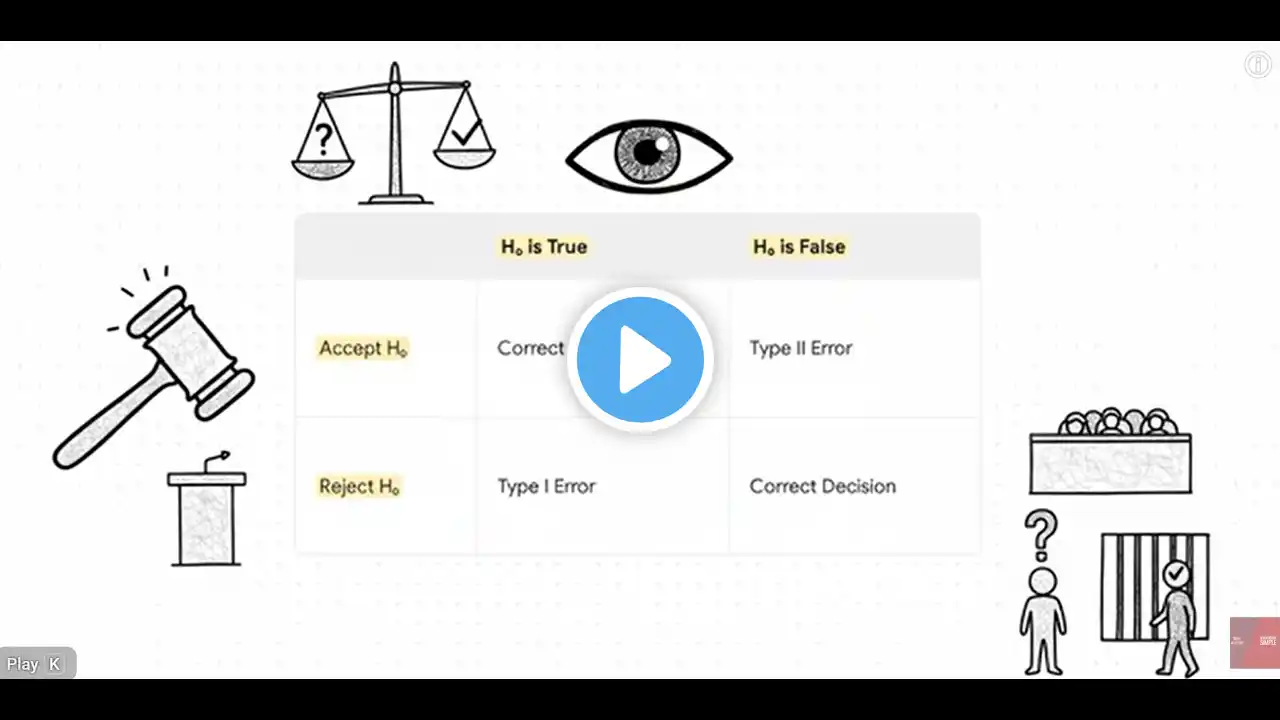

Welcome to the definitive guide on Statistical Inference, the fundamental process that lets us make statements about an unobserved true population parameter based on observed sample statistics. This lesson breaks down the two main methods used in inference: Hypothesis Testing (using the p-value) and Estimation (using the Confidence Interval).

Part 1: Understanding Hypothesis Testing

We start with Hypothesis Testing, a data-based decision procedure designed to produce a conclusion about some scientific system.

• The Statistical Hypothesis: A statistical hypothesis is an assertion or statement concerning one or more populations.
• The Null Hypothesis (H0): This is the main hypothesis we wish to test, and it must specify an exact value of the population parameter (like the mean or proportion). It is never a statement about the sample.
• The Alternative Hypothesis (H1): This is the hypothesis we accept when H0 is rejected, and it allows for several possible values of the population parameter. Like H0, it must be formulated before the sample is examined.
• Decision Making: We follow specific steps, including choosing a significance level (α) and selecting the appropriate test statistic. We reject H0 if the test statistic falls into the critical region or, equivalently, if the computed P-value is less than or equal to the chosen significance level α.

Part 2: Errors and Significance

Learn the four possible situations that determine whether our statistical decision is correct or in error: H0 is either true or false, and we either reject it or fail to reject it. Two of those combinations are errors:

• Type I Error: Rejecting the null hypothesis (H0) when it is actually true. The probability of committing this error is the level of significance, denoted by α.
• Type II Error: Failing to reject the null hypothesis when it is false, also known as a false negative. The probability of this error is denoted by β.
• We also cover the power of a test, which is the probability of rejecting H0 given that a specific alternative is true, computed as 1−β.
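As a rough illustration of how α, β, and power relate, the sketch below assumes an upper-tailed z test of H0: μ = μ0 against one specific alternative μ = μ1 > μ0, with a known population standard deviation. The function name `power_one_sided_z` and all the numbers are hypothetical and chosen only for the example.

```python
from math import sqrt
from statistics import NormalDist  # standard library, Python 3.8+


def power_one_sided_z(mu0, mu1, sigma, n, alpha):
    """Power of an upper-tailed z test of H0: mu = mu0 when the true mean is mu1.

    Assumes a normal sampling distribution and a known sigma.
    """
    std_normal = NormalDist()
    # Critical value: reject H0 when z exceeds this cutoff.
    z_crit = std_normal.inv_cdf(1 - alpha)
    # How far the alternative sits from mu0, in standard-error units.
    shift = (mu1 - mu0) / (sigma / sqrt(n))
    # beta = P(fail to reject H0 | true mean is mu1)
    beta = std_normal.cdf(z_crit - shift)
    return 1 - beta


# Hypothetical setup: mu0 = 100, specific alternative mu1 = 103, sigma = 10, n = 25.
for alpha in (0.10, 0.05, 0.01):
    power = power_one_sided_z(mu0=100, mu1=103, sigma=10, n=25, alpha=alpha)
    print(f"alpha={alpha:.2f}  beta={1 - power:.3f}  power={power:.3f}")
```

Running the loop with the sample size held fixed shows β growing as α shrinks, which is the trade-off between the two error types.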
Note that, for a fixed sample size, decreasing the probability of one type of error generally results in an increase in the probability of the other.

Part 3: The P-Value and Test Statistics

The P-value is a probability that measures the evidence against the null hypothesis provided by the sample.

• P-Value Rule: We try to show that H0 is false. If the probability of observing data at least as extreme as ours, under the assumption that H0 is true, is very small, this suggests H0 is false. Smaller p-values indicate more evidence against H0.
• We explore how to calculate test statistics, including the Z statistic for a single mean, $z = \frac{\bar{x} - \mu_0}{\sigma/\sqrt{n}}$, and when to use the t distribution instead because the population standard deviation is unknown. The P-value computed from the test statistic is then compared with α to decide whether to reject H0.

Part 4: Estimation and Confidence Intervals (CI)

Estimation uses sample data to determine a range of plausible values for an unknown population parameter.

• Point Estimate: The sample mean ($\bar{x}$) is the point estimate for the population mean (μ).
• Confidence Interval (CI): Since point estimates are rarely exact, we use a Confidence Interval defined as the point estimate ± margin of error.
• The margin of error quantifies how far the point estimate may be from the true parameter at a desired level of confidence. It is found by multiplying a critical value (like $z_{\alpha/2}$) by a standard error.
• We discuss specific formulas for various scenarios, including the CI for a single mean ($\bar{x} \pm z_{\alpha/2}\,\frac{\sigma}{\sqrt{n}}$), the difference in two means, and the CI for a single proportion ($\hat{p} \pm z_{\alpha/2}\,\sigma_{\hat{p}}$, where $\sigma_{\hat{p}}$ is the standard error of the sample proportion). For instance, a 95% CI for a single mean uses a Z value of 1.96 (when α=0.05).

Master these concepts today and transform your understanding of statistical decision-making!
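
To make the Z statistic, P-value, and confidence interval formulas concrete, here is a minimal sketch for a single mean with known σ. It assumes a two-sided alternative (the lesson does not fix the direction), and the function name `z_test_and_ci` and the sample figures are hypothetical.

```python
from math import sqrt
from statistics import NormalDist  # standard library, Python 3.8+


def z_test_and_ci(x_bar, mu0, sigma, n, alpha=0.05):
    """Two-sided z test of H0: mu = mu0 plus a (1 - alpha) CI for the mean.

    Assumes a known population standard deviation sigma.
    """
    std_normal = NormalDist()
    std_error = sigma / sqrt(n)

    # Test statistic: z = (x_bar - mu0) / (sigma / sqrt(n))
    z = (x_bar - mu0) / std_error
    # Two-sided P-value: probability of a |z| at least this large under H0.
    p_value = 2 * (1 - std_normal.cdf(abs(z)))

    # Confidence interval: point estimate +/- critical value * standard error.
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    margin = z_crit * std_error
    return z, p_value, (x_bar - margin, x_bar + margin)


# Hypothetical sample: mean 52.0 from n = 36 observations, sigma = 6.0, H0: mu = 50.
z, p_value, ci = z_test_and_ci(x_bar=52.0, mu0=50.0, sigma=6.0, n=36)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print(f"95% CI: ({ci[0]:.2f}, {ci[1]:.2f})")
print("Reject H0" if p_value <= 0.05 else "Fail to reject H0")
```

For a two-sided test at level α, rejecting H0 corresponds to μ0 falling outside the (1 − α) confidence interval, so the two methods lead to the same decision in this setup.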